Jentic in Beta: One MCP for every integration

Sean Blanchfield

Last updated: May 26, 2025

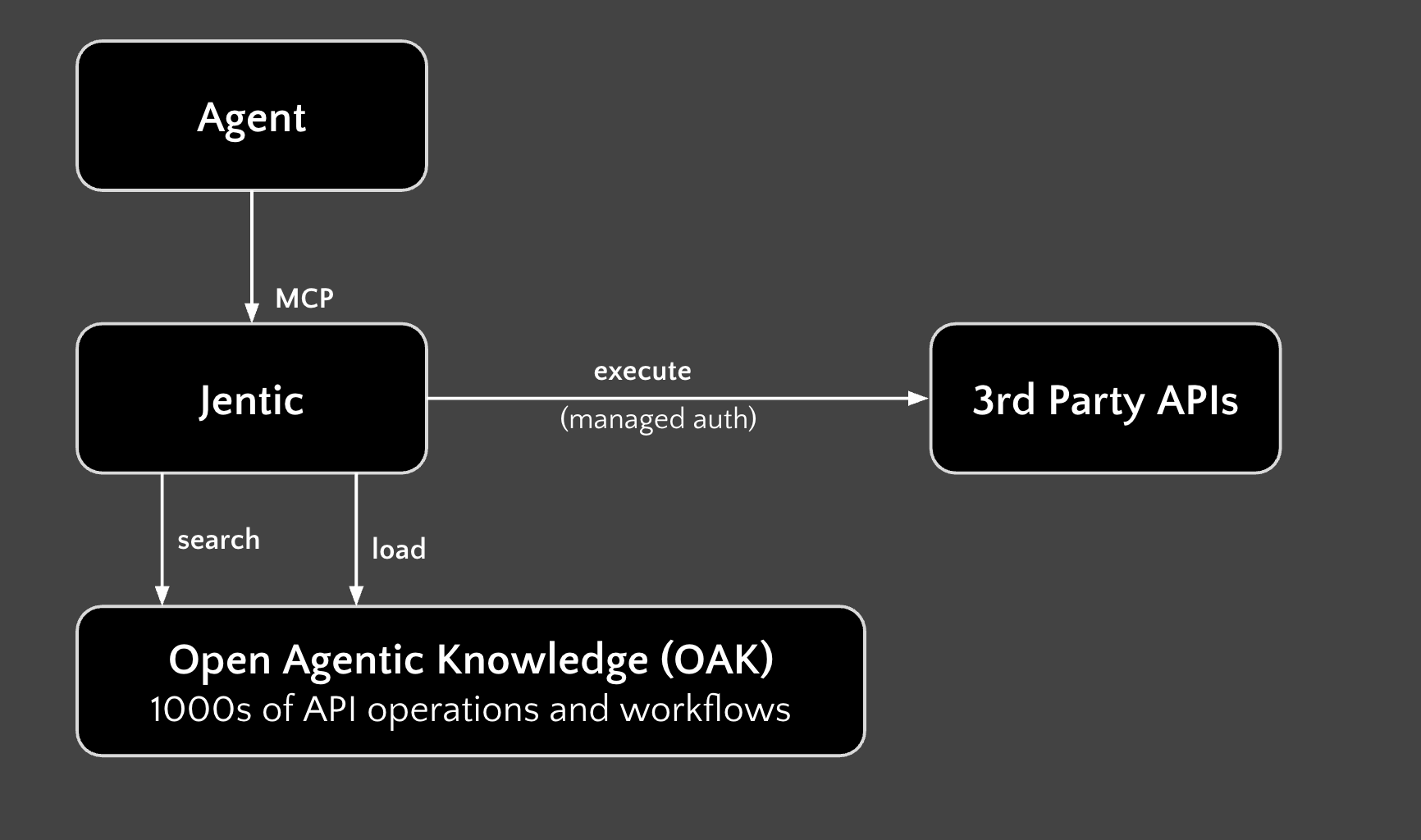

Today we’re excited to release the first version of Jentic in public beta. This is a Just-In-Time-Tooling engine for agents, providing seamless access to the entire Open Agentic Knowledge (OAK) repository of thousands of API tools and workflows through a single integration. With Jentic, you don't need thousands of remote MCP servers - you just need one.

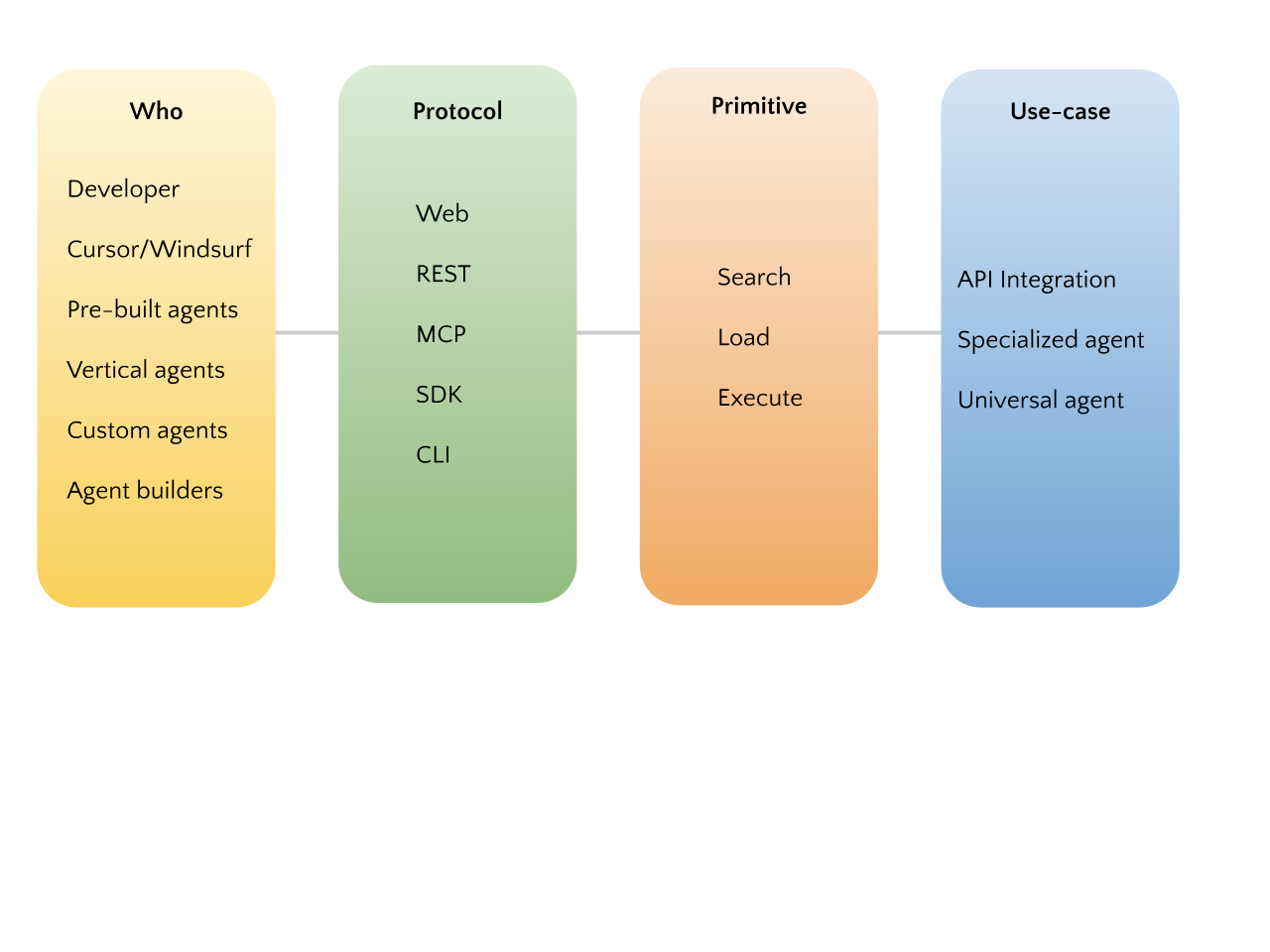

You can hook Jentic up via MCP, SDK, REST, CLI or Web app, and can use it in your coding agent or your bespoke agents to:

- One-shot complex integration code

- Automate tooling in your specialized agents

- Build universal agents with Just-In-Time-Tooling

- Ask Cursor or Windsurf about the news

Access is open to all. You or your agent can use Jentic anonymously with basic rate limiting, or you can register your email address for higher rate limits. We may activate a wait-list depending on system load, but we'll work hard not to let this happen. We'd welcome your feedback on your experience trying this out (join us on Discord here), and we’d love to hear about any issues and feature requests. If an API or workflow is missing, you can let us know by raising an issue, and our bots will automatically import the API or create the workflow in the open-source OAK repository.

Read on for more information about how this works, why we're building this and what's coming next. You can also head right over to the Install page to try it out, or watch this video demo of Jentic in action.

Jentic and MCP

Anthropic launched the Model Context Protocol (MCP) in late November 2024 as the "USB-C for AI applications", a standard way of connecting AI agents to different types of external tools. MCP had a slow start until mid-February, when Windsurf and Cursor added support, quickly followed by the MCP-native Claude Code, and then adoption by competitor OpenAI within a month. The AI community is now brimming with MCP mania, and everyone has zoomed in on the infinite capabilities unlocked by adding tools and APIs to agents. MCP marketplaces now list thousands of remote MCP servers of uncertain provenance. The simplicity of MCP is great, but tightly coupling your agent to lots of MCP servers hits a performance wall pretty fast, and is a hot mess from a security and management perspective (link, link, link, link).

This friction arises surprisingly early. Agent performance degrades rapidly as you add more tools. Accurate tool use depends on detailed descriptions, but having a big chunk of the context window dedicated to a long list of detailed tool documents is challenging. The LLM's attention becomes thinly spread, vital details about each tool get lost in the middle, and your agent starts calling the wrong tool, calling the right tool in the wrong way or hallucinating parameters (see Sierra's τ-bench paper for discussion of failure modes). MCP mania is now giving way to a frustrating reality: front-loading lots of tools doesn't scale (see our previous post “The MCP Tool Trap” for a more detailed discussion).

The solution is an architecture that allows agents to grab tools on demand, instead of carrying all their tools around all the time: an AI-era equivalent of service discovery or late function binding. By loading detailed information just in time, the relevant tool information is always at the front of the context window, benefiting from focused LLM attention, while keeping the context window as clean as possible. We call this Just-In-Time-Tooling (JITT), and it is about giving agents the right tool at the right time, every time. (See our earlier post on “Just-In-Time-Tooling”.)

JITT turns tool calling from a code execution problem into a data retrieval problem, and benefits from tools being represented as data instead of code. In recognition of this, and of the additional benefits of taking a community approach to a shared problem, we previously released the Open Agentic Knowledge (OAK) repository as a centralized corpus of structured API and workflow documentation that can be leveraged in AI applications.
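To make the "tools as data" idea concrete, here is a minimal sketch of retrieval-based tool selection. Everything in it is an assumption made for illustration: the `ToolRecord` shape, the three catalog entries, and the naive keyword-overlap `search` are hypothetical and are not OAK's schema or Jentic's search. It only shows that when tools are plain records, picking one is a lookup, and only the selected record needs to enter the context window.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ToolRecord:
    """A tool described purely as data (hypothetical shape, not OAK's schema)."""
    tool_id: str
    summary: str
    parameters: dict = field(default_factory=dict)


# Hypothetical catalog entries, invented for illustration.
CATALOG = [
    ToolRecord("ip_geolocate", "Geolocate an IP address",
               {"ip": {"type": "string", "description": "IPv4 or IPv6 address"}}),
    ToolRecord("weather_forecast", "Get the weather forecast for a city",
               {"city": {"type": "string"}, "days": {"type": "integer"}}),
    ToolRecord("email_send", "Send an email message",
               {"to": {"type": "string"}, "subject": {"type": "string"},
                "body": {"type": "string"}}),
]


def search(query, catalog, limit=1):
    """Rank tools by how many query words appear in their summary."""
    words = set(query.lower().split())
    scored = [(len(words & set(t.summary.lower().split())), t) for t in catalog]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [tool for _, tool in scored[:limit]]


def load(tool):
    """Render the full detail of one tool as text for the prompt."""
    return json.dumps(
        {"id": tool.tool_id, "summary": tool.summary, "parameters": tool.parameters},
        indent=2,
    )


def front_loaded_context(catalog):
    """Every tool description, always present in the prompt."""
    return "\n".join(load(tool) for tool in catalog)


def just_in_time_context(query, catalog):
    """Only the tool(s) retrieved for the current request."""
    return "\n".join(load(tool) for tool in search(query, catalog))


query = "geolocate this ip address"
print("front-loaded characters:", len(front_loaded_context(CATALOG)))
print("just-in-time characters:", len(just_in_time_context(query, CATALOG)))
```

With three toy tools the difference is small, but the front-loaded context grows with the size of the catalog while the just-in-time context grows only with the number of tools retrieved for the request.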

Usage Scenarios

You can use the Jentic SDK, CLI or MCP for:

- Old-fashioned API calls, like calling an API operation or workflow. Jentic makes it easy to work with APIs and workflows, whether from code, the command line or MCP. Developers use the web app and coder agents use the MCP to find the right API operations or workflows, then invoke them by ID at runtime. Your agent can also just execute via CLI or MCP if you have a one-off requirement, like geolocating an IP address or finding out if it's going to rain tomorrow.

- Specialized agents, which use a small pre-selected toolset. Jentic makes it trivial to mix-and-match anything in OAK into tool definitions to be used with OpenAI or Anthropic or a raw LLM API. Typically, you or your agent will select APIs and workflows at design time, then store these in your project in Jentic's JSON format. Jentic's SDK can export this as OpenAI or Anthropic-compatible tool definitions (and the Jentic SDK also executes the toolcalls for you). You can also make this work even when your LLM doesn't have an official tool API (or if you don't want to use it).

- Universal agents, which use a flexible toolset. The agent searches Jentic at runtime to find the right tools for each job. This kind of agent has no pre-selected tools, at least not in its code or configuration. It searches, loads and executes tools in a pure Just-In-Time-Tooling architecture.
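For the specialized-agent scenario, the following sketch shows what exporting one stored tool to provider-specific tool definitions can look like. The `STORED_TOOL` record and the two converter functions are hypothetical: they are not Jentic's JSON format or SDK, which this post does not show. The two output shapes follow the function-tool format of OpenAI's Chat Completions API and the tool format of Anthropic's Messages API, both of which describe parameters with JSON Schema.

```python
import json

# Hypothetical stored tool record, selected at design time.
# This is NOT Jentic's JSON format; it is a neutral stand-in.
STORED_TOOL = {
    "name": "get_weather_forecast",
    "description": "Get the weather forecast for a city.",
    "input_schema": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name"},
            "days": {"type": "integer", "description": "Days ahead"},
        },
        "required": ["city"],
    },
}


def to_openai_tool(tool):
    """Wrap the record in the OpenAI Chat Completions function-tool shape."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["input_schema"],
        },
    }


def to_anthropic_tool(tool):
    """Map the record onto the Anthropic Messages API tool shape."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["input_schema"],
    }


print(json.dumps(to_openai_tool(STORED_TOOL), indent=2))
print(json.dumps(to_anthropic_tool(STORED_TOOL), indent=2))
```

Keeping one neutral record per tool and converting at the edge means the same design-time selection can be reused across providers without rewriting the tool descriptions.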

Putting all this together, here are some illustrative examples of things you can achieve with Jentic:

- Use the Jentic CLI to geolocate an IP address

- Find an API in Jentic’s web app and hand-code a simple weather app to use it via Jentic’s SDK

- Save time, and get your coder agent to use Jentic MCP and SDK to one-shot a weather app

- Ask your coder agent to tell you if it's going to rain tomorrow

- Ask a prebuilt agent (e.g., Claude Desktop) to tell you if it's going to rain tomorrow

- Hand-code a universal agent that searches, loads and executes tools via Jentic's REST API (possibly in conjunction with an agent framework like LangChain, LlamaIndex, CrewAI, Autogen etc)

- Get your coder agent (Cursor etc) to use Jentic's MCP to create a universal agent

- Give a vertical agent extra superpowers by adding Jentic's MCP

- Create a no-code universal agent using your favorite agent builder platform with Jentic's MCP

- Create a specialized agent by pre-selecting OAK tools and workflows, hooking them up to your LLM's official toolcalling API via Jentic's SDK

- Create a specialized agent by passing a mix-and-match toolset from OAK directly to your LLM via LangChain, and execute resulting toolcalls using Jentic's SDK
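The universal-agent examples above share one loop: search for a tool, load its details, then execute it. The sketch below shows that loop written against an interface, so the agent's own code contains no pre-selected tools. All names here are assumptions for illustration: `ToolBackend`, `InMemoryBackend`, `run_universal_agent` and `fake_llm` are hypothetical and do not represent Jentic's REST API, SDK or MCP tools. A real backend would call a remote service, and a real agent would ask an LLM to choose the arguments.

```python
from typing import Any, Callable, Protocol


class ToolBackend(Protocol):
    """Hypothetical interface; a real backend might wrap a REST or MCP client."""

    def search(self, goal: str) -> list[str]: ...
    def load(self, tool_id: str) -> dict[str, Any]: ...
    def execute(self, tool_id: str, arguments: dict[str, Any]) -> Any: ...


class InMemoryBackend:
    """Stub backend with canned tools so the sketch runs offline."""

    def __init__(self) -> None:
        self._tools = {
            "ip_geolocate": {
                "description": "geolocate an ip address",
                "parameters": ["ip"],
                "handler": lambda a: {"ip": a["ip"], "country": "stubbed"},
            },
            "weather_forecast": {
                "description": "weather forecast for a city",
                "parameters": ["city"],
                "handler": lambda a: {"city": a["city"], "rain": "stubbed"},
            },
        }

    def _overlap(self, goal: str, tool_id: str) -> int:
        words = set(goal.lower().split())
        return len(words & set(self._tools[tool_id]["description"].split()))

    def search(self, goal: str) -> list[str]:
        # Naive keyword ranking; tools with no shared words are dropped.
        hits = [tid for tid in self._tools if self._overlap(goal, tid) > 0]
        return sorted(hits, key=lambda tid: self._overlap(goal, tid), reverse=True)

    def load(self, tool_id: str) -> dict[str, Any]:
        spec = self._tools[tool_id]
        return {"id": tool_id, "description": spec["description"],
                "parameters": spec["parameters"]}

    def execute(self, tool_id: str, arguments: dict[str, Any]) -> Any:
        return self._tools[tool_id]["handler"](arguments)


def run_universal_agent(
    goal: str,
    backend: ToolBackend,
    choose_arguments: Callable[[str, dict[str, Any]], dict[str, Any]],
) -> dict[str, Any]:
    """Search, load and execute a tool for one goal, with no tools hard-coded."""
    candidates = backend.search(goal)
    if not candidates:
        return {"goal": goal, "error": "no matching tool found"}
    spec = backend.load(candidates[0])        # detail loaded just in time
    arguments = choose_arguments(goal, spec)  # an LLM call in a real agent
    return {"tool": spec["id"], "result": backend.execute(spec["id"], arguments)}


def fake_llm(goal: str, spec: dict[str, Any]) -> dict[str, Any]:
    """Stand-in for an LLM: uses the goal's last word as the first parameter."""
    return {spec["parameters"][0]: goal.split()[-1]}


outcome = run_universal_agent(
    "geolocate the ip address 8.8.8.8", InMemoryBackend(), fake_llm
)
print(outcome)
```

Because the loop depends only on the three backend methods, swapping the stub for a networked implementation would not change the agent code.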

What’s next

We're excited to be rapidly building out new features. We will be releasing regular updates to unlock the following for our users:

- Hosted execution. Today, you can execute anything in OAK using the open-source oak-runner library. This is great if you're in an environment where you can install Python dependencies (e.g., Cursor, Windsurf, Claude Code, Codex etc) but it's a bit of extra work, and a bit inconvenient with an off-the-shelf agent like Claude Desktop, or agent platforms like Amazon Bedrock. We will fix this by shortly offering an optional hosted execution service that will execute API workflows and operations for you.

- Managed authentication and authorization. In the coming months we will release a managed authentication layer, allowing you to centrally manage all agent credentials and permissions, removing that high-stakes code and configuration from your agents.

- Private API support. Publish your self-hosted infrastructure and microservice architecture into Jentic, allowing both public and private APIs and workflows to be provisioned to your agent fleet and managed in one place.

In Summary...

Let's move beyond the era of front-loaded tools. Production-ready agents need scalability, capability, reliability, security and manageability.

We are taking an opinionated but well-informed approach grounded in proven engineering principles: dynamic resolution, decoupling data from code, and collaborating through well-accepted open standards.

Jentic provides the infrastructure to implement this robust pattern, acting as an intelligent Just-In-Time-Tooling engine for your agents. Stop burdening agents with front-loaded tools; empower them with dynamic access to the capabilities they need, precisely when they need them.