Just-In-Time-Tooling: Scalable, Capable and Reliable Agents

Sean Blanchfield


Last updated: May 26, 2025

This post builds on our earlier discussion of why front-loading tools with MCP doesn't scale.

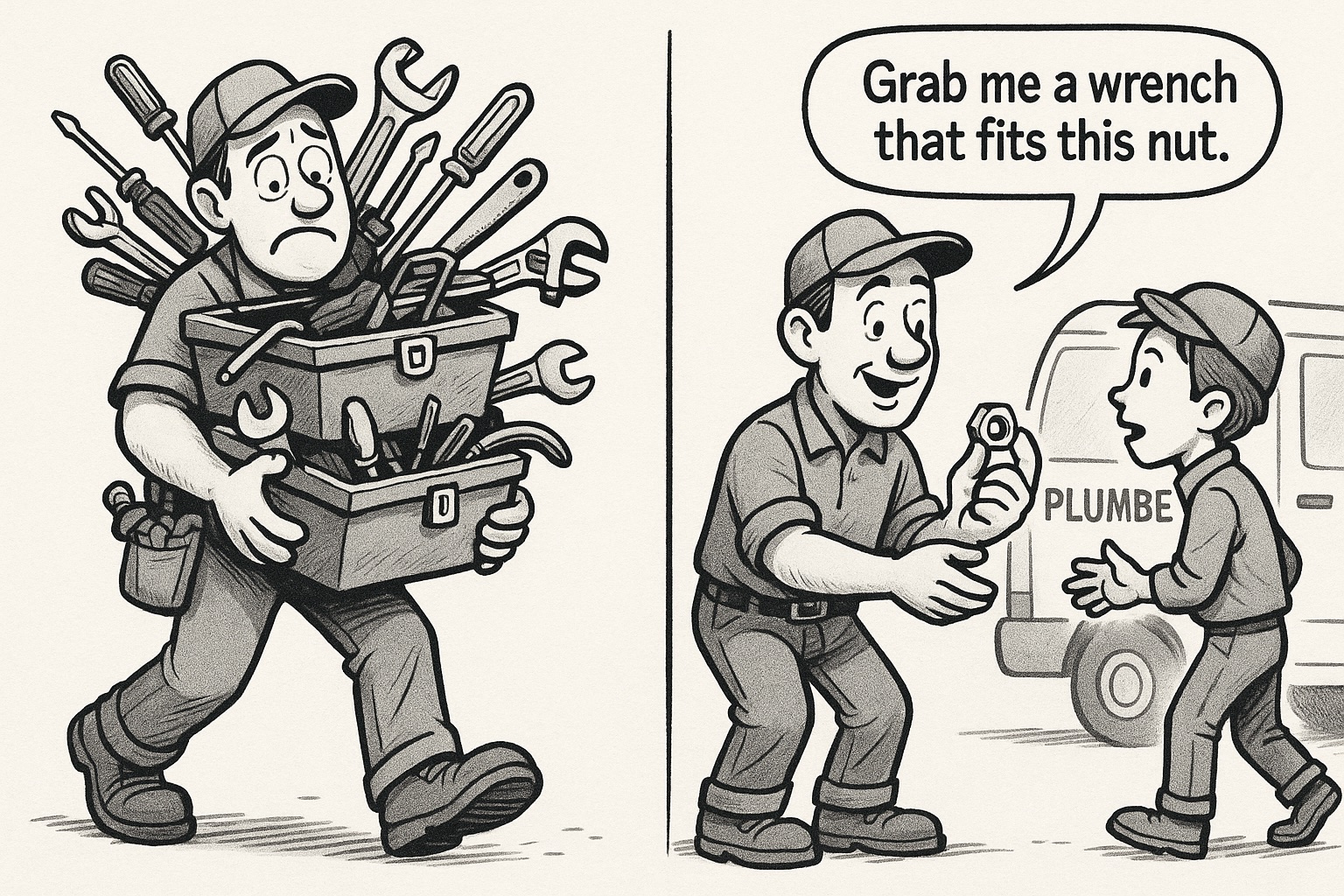

The first wave of agent tooling has treated integration as a static design-time concern. You pick your tools in advance, load them into the prompt or an MCP manifest, and hope for the best. This works… to a point.

In a previous post we talked about how preloading tools into the context window quickly runs into intractable limits. Performance drops. Hallucinations rise. Context gets cluttered. Maintenance becomes unmanageable. The more capable your agent is supposed to be, the more brittle it gets.

It’s time to move past pre-loading. Tooling should be dynamic.

What is Just-In-Time-Tooling?

Just-In-Time-Tooling (JITT) is a scalable agent architecture pattern. Instead of front-loading a big list of tool definitions into your agent ahead of time, you index the tools in a retrieval (RAG) system and let the agent:

- Search for the right tool based on its current intent

- Load detailed, machine-readable documentation on demand

- Execute the tool with generated parameters and evaluate the result

This decouples tool knowledge from agent reasoning, putting unlimited broad and deep tool knowledge within arm’s reach without derailing the LLM’s attention.
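The search, load and execute steps above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `REGISTRY`, the `ToolEntry` type, the two example tools and the word-overlap ranking are all hypothetical stand-ins. A real system would search a semantic index and call real APIs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolEntry:
    name: str
    summary: str                 # short text used for search
    spec: dict                   # detailed, machine-readable documentation
    run: Callable[..., object]   # stand-in for a real executor


# Hypothetical in-memory registry; a real system would use a search index.
REGISTRY = [
    ToolEntry("get_weather", "get current weather forecast for a city",
              {"params": {"city": "string"}},
              lambda city: f"Sunny in {city}"),
    ToolEntry("send_email", "send an email message to a recipient",
              {"params": {"to": "string", "body": "string"}},
              lambda to, body: f"Sent to {to}"),
]


def search_tools(intent: str, top_k: int = 1) -> list[str]:
    """Step 1: rank tools by word overlap between the intent and each summary."""
    words = set(intent.lower().split())
    ranked = sorted(REGISTRY,
                    key=lambda t: len(words & set(t.summary.split())),
                    reverse=True)
    return [t.name for t in ranked[:top_k]]


def load_spec(name: str) -> dict:
    """Step 2: load detailed documentation only for the chosen tool."""
    return next(t.spec for t in REGISTRY if t.name == name)


def execute(name: str, **params) -> object:
    """Step 3: check the parameters against the spec, then call the tool."""
    missing = set(load_spec(name)["params"]) - set(params)
    if missing:
        raise ValueError(f"missing parameters: {sorted(missing)}")
    tool = next(t for t in REGISTRY if t.name == name)
    return tool.run(**params)


name = search_tools("what is the weather in Dublin")[0]
spec = load_spec(name)
result = execute(name, city="Dublin")
print(name, spec, result)
```

Only the spec of the selected tool ever enters the agent's working context; the rest of the registry stays out of the prompt.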

The JITT pattern is flexible: it works both for specialized agents that have narrow, pre-selected capabilities and for universal agents that discover and use tools at runtime. It can be thought of as the AI analog of late-binding dependencies in application code, or of the Service-Oriented Architecture / Enterprise Service Bus pattern in distributed systems.

Where traditional RAG augments language models with document knowledge, JITT augments them with executable capability. Instead of pulling in facts, you're pulling in tool specs—right when you need them.

Tools as Data

A key enabler is to recognize that tools should be represented as data, not code.

Most agents today still define tools in code as functions, tightly coupled to a specific runtime, framework or LLM provider. This creates brittle dependencies and poor interoperability. Adding a new tool requires new code, and porting to another framework requires a rewrite.

Instead, we can treat tools as structured data: declarative, machine-readable specifications that describe the tool's behavior, parameters, authentication, and effects. Think OpenAPI or Arazzo. These schemas turn tools into portable knowledge artifacts that can be searched, ranked, loaded, and executed by any agent — regardless of language, framework or LLM.
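To make "tools as data" concrete, here is a rough sketch in which an OpenAPI-style fragment is held as a plain dictionary and a single generic function turns it into an HTTP request. The API at `api.example.com`, the `getForecast` operation and the `build_request` helper are invented for illustration, and only a small subset of OpenAPI (path and query parameters) is handled.

```python
from urllib.parse import quote, urlencode

# An OpenAPI-style fragment held as plain data (hypothetical API, illustration only).
WEATHER_SPEC = {
    "servers": [{"url": "https://api.example.com"}],
    "paths": {
        "/forecast/{city}": {
            "get": {
                "operationId": "getForecast",
                "summary": "Get the weather forecast for a city",
                "parameters": [
                    {"name": "city", "in": "path", "required": True},
                    {"name": "days", "in": "query", "required": False},
                ],
            }
        }
    },
}


def build_request(spec: dict, operation_id: str, args: dict) -> tuple[str, str]:
    """Turn a spec plus arguments into (HTTP method, URL) with no tool-specific code."""
    base = spec["servers"][0]["url"]
    for path, methods in spec["paths"].items():
        for method, op in methods.items():
            if op.get("operationId") != operation_id:
                continue
            query = {}
            for param in op.get("parameters", []):
                pname = param["name"]
                if pname not in args:
                    if param.get("required"):
                        raise ValueError(f"missing required parameter: {pname}")
                    continue
                if param["in"] == "path":
                    # Substitute the URL-escaped value into the path template.
                    path = path.replace("{" + pname + "}", quote(str(args[pname]), safe=""))
                elif param["in"] == "query":
                    query[pname] = args[pname]
            url = base + path + ("?" + urlencode(query) if query else "")
            return method.upper(), url
    raise KeyError(operation_id)


print(build_request(WEATHER_SPEC, "getForecast", {"city": "Dublin", "days": 3}))
```

Adding another tool here means adding another spec dictionary; `build_request` itself does not change.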

When you represent tools as data you get:

- data-driven design: agents reason over tool specs like they reason over prompts or documents.

- retrievability: semantically search and rank tools based on task goals, without preloading all options.

- platform independence: the same tool spec can be used by LangChain, LlamaIndex, your in-house framework, or by the next big thing.

- interoperability: established standards like OpenAPI give you compatibility with thousands of existing tools and clients.

- low integration overhead: adding a tool is a data operation, not a software deployment.

- bigger community: share and optimize common tools with the wider community, across different platforms and frameworks.

Agents should not contain tool code — they should query tool knowledge.

This is what powers Just-In-Time-Tooling. Instead of binding tools statically at design time, agents resolve the right tools dynamically at runtime. And because tool specs are structured and portable, they can be retrieved, injected, and executed in any agent pipeline.

The Open Agentic Knowledge (OAK) repository is built on this philosophy: tools are data-first, open, and universally consumable. OAK is an open-source, standards-based corpus of API operations and workflows—designed for agents. It contains thousands of OpenAPI and Arazzo specs for real-world APIs and composable workflows, optimized for LLM consumption. OAK is the knowledge layer your agent needs to power a tool-focused RAG system.

The benefits of JITT

Implementing the Just-In-Time-Tooling architecture brings the following benefits:

- Unlimited capabilities: Agents aren’t limited to what fits in context — they can call anything, if they can find it.

- Focused attention: Your LLM focuses attention on the salient details at all times when generating tool calls or evaluating results.

- Better reasoning: Reduces tool token consumption in the context window, leaving more space for reasoning and task memory.

- Speed: Focused tool discovery and execution means agents get it right first time more often, resulting in faster execution, less token consumption and lower costs.

- Data-driven tool management: Tools can be added, removed, updated, and ranked without touching your agent’s code.

- Simpler agents: Your agent just learns to search, load, and call. It doesn't need to memorize the universe. No more custom tool code and tool prompts.

- Universal agent: A single agent can be designed to perform diverse tasks with no hard-coded tool knowledge, becoming a universal agent or powerful general-purpose AI assistant.

Just-In-Time-Tooling transforms tool integration from a brittle, complex task into a managed, scalable process. It’s a practical design pattern that cleanly separates capability resolution from task reasoning.

Jentic Search = Hosted RAG Over OAK

If you’re building this pattern yourself, you’ll need to:

- Ingest API specs (OpenAPI, Arazzo, etc.)

- Embed and index them for semantic search

- Handle search, ranking, retrieval, and filtering

- Inject results into your agent’s context at the right moment
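As a rough sketch of what those four steps involve, the snippet below ingests a tiny OpenAPI-style spec, embeds each operation, ranks operations against a query, and formats the hits for injection into a prompt. Everything here is an assumption made for illustration: the `SPEC` contents, the function names, and especially the bag-of-words `embed`, which stands in for a real embedding model. It does not describe how Jentic or OAK work internally.

```python
import math
import re
from collections import Counter


def ingest(spec: dict) -> list[dict]:
    """Flatten an OpenAPI-style spec into one searchable document per operation."""
    docs = []
    for path, methods in spec.get("paths", {}).items():
        for method, op in methods.items():
            docs.append({
                "id": op.get("operationId", f"{method} {path}"),
                "text": f"{op.get('summary', '')} {method} {path}",
            })
    return docs


def embed(text: str) -> Counter:
    """Toy embedding: bag-of-words counts (a real pipeline would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def search(index: list, query: str, top_k: int = 2, min_score: float = 0.0) -> list[dict]:
    """Rank indexed operations by similarity and drop those at or below min_score."""
    q = embed(query)
    scored = [(cosine(q, vec), doc) for doc, vec in index]
    scored = [(s, d) for s, d in scored if s > min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def inject(task: str, hits: list[dict]) -> str:
    """Build the context the agent sees: the task plus only the retrieved tools."""
    lines = [f"- {d['id']}: {d['text']}" for d in hits]
    return f"Task: {task}\nRelevant tools:\n" + "\n".join(lines)


# Hypothetical spec used only for this example.
SPEC = {"paths": {
    "/invoices": {"post": {"operationId": "createInvoice",
                           "summary": "Create a new invoice for a customer"}},
    "/invoices/{id}": {"get": {"operationId": "getInvoice",
                               "summary": "Fetch an invoice by id"}},
    "/users": {"get": {"operationId": "listUsers", "summary": "List all users"}},
}}

index = [(doc, embed(doc["text"])) for doc in ingest(SPEC)]
task = "create an invoice for a customer"
hits = search(index, task, top_k=1)
prompt = inject(task, hits)
print(prompt)
```

Even in this toy form, the division of labour is visible: ingestion and indexing happen once, offline, while search and injection run per task.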

We built Jentic to make this easy. It’s a fully operational JITT pipeline out of the box: hosted RAG over tools, built on open standards, with full support for secure execution and credential management. For more information, check out the [launch post] or head over to [our installation instructions].

Let’s move beyond front-loading tools into agents and start building agents with capabilities that scale.