The MCP Tool Trap

Sean Blanchfield

Last updated: May 26, 2025

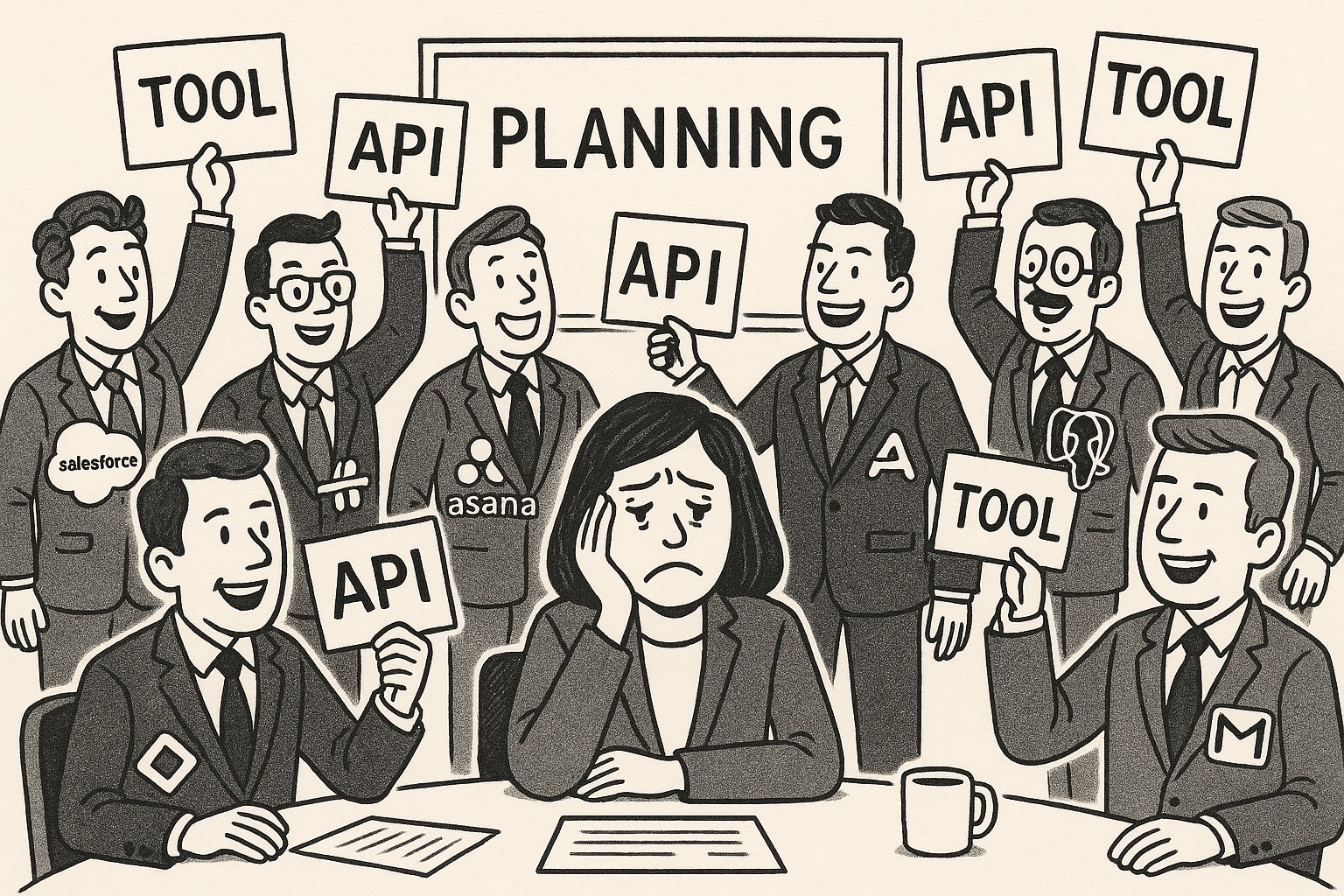

Why Front-Loading Tools Doesn’t Scale

We believe agents are the future of software, that agents run on APIs, and that agents are limited only by the tools you give them. The promise of general-purpose agents is tantalising, but increasing agent capabilities by stuffing tool information into the context window isn't scaling. Whether you are handcrafting tool information into your system prompt, loading tool definitions into your LLM's tool API or loading an MCP manifest, you don't get far. You start feeling the decline in tool calling accuracy surprisingly early, after adding just a handful of tools. After this point, you face a perverse situation in which trying to improve tool calling reliability by providing extra tool detail backfires. The core reasons are:

- Token Bloat: Every tool description consumes valuable context window tokens needed for agent reasoning and task memory. If your tool descriptions are detailed, it doesn't take many tools to crowd the context, which impairs performance and increases agent retries and token consumption. If your only solution to a reliability problem is to reduce the detail in your tool descriptions, then you are in a lose-lose situation.

- Declining Accuracy: A large toolset spreads the LLM's attention thinly across many options, raising the probability of incorrect tool selection or parameter hallucination. The LLM faces too many choices, ambiguity between similar tools, and the tendency of models to lose track of information in the middle of a long context. This leads to the agent selecting the wrong tool, invoking a tool incorrectly or with hallucinated parameters, or misinterpreting the response. See the “Less is More” paper for a deeper discussion of how tool calling accuracy declines as more tools are added to context. Using models with large context windows to scale tool use could actually worsen the attention problem by overloading the model with even more options, as well as introducing extra cost.

- Declining Reasoning: If tools are crowding out the context window, there's less room for context about the current project or goal, and the agent's chain of thought. Vital information might scroll out of the window, leading to mistakes. Even with large context window models, mixing a lot of tool information into the context window creates room for confusion. As much of the context window as possible should be reserved for project context and reasoning traces, not information about tools that may not even be required.

- Maintenance Burden: Managing even a handful of tightly-coupled tools becomes a chore. APIs evolve and the performance of tool descriptions often regresses with new LLM versions, leading to brittle, error-prone integrations.

- Security Vulnerabilities: Embedding credentials (API keys, tokens, passwords) directly within JSON manifests, tool code, or prompts is an anti-pattern. This practice dramatically expands the agent's attack surface, scattering secrets across potentially insecure locations (context windows, code repositories, shared documents). Mapping the proliferation of secrets as agents spread across your org is seriously challenging, and revoking secrets without unplanned agent downtime becomes impossible.
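
To make the token bloat point concrete, here is a rough back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: the 4-characters-per-token heuristic, the shape of the hypothetical tool definitions, and the 32,000-token context window. Real tokenizers and tool formats will give different numbers, but the trend is the same: detailed descriptions multiplied by many tools eat a large share of the window.

```python
import json

# Assumption: roughly 4 characters per token. Real tokenizers vary by model.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def make_tool(index: int, description_chars: int) -> dict:
    """Build a hypothetical tool definition with a description of the given length."""
    return {
        "name": f"tool_{index}",
        "description": "x" * description_chars,  # stand-in for real prose
        "input_schema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }


def context_share(num_tools: int, description_chars: int, context_window_tokens: int) -> float:
    """Fraction of the context window consumed by front-loaded tool definitions."""
    tools = [make_tool(i, description_chars) for i in range(num_tools)]
    used = sum(estimate_tokens(json.dumps(tool)) for tool in tools)
    return used / context_window_tokens


if __name__ == "__main__":
    for n in (5, 20, 50):
        share = context_share(n, description_chars=2000, context_window_tokens=32_000)
        print(f"{n} tools: ~{share:.0%} of the context window")
```

Shrinking `description_chars` lowers the share, but that is exactly the lose-lose trade described above: the tokens come back at the cost of the detail the model needs to call the tool correctly.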

The Mirage of Multi-Agent Workarounds

One way to navigate these issues is to lower ambitions, and to only build agents that use a few tools. That's fine, but it leaves a lot on the table. Another approach is to build a multi-agent architecture that splits your agent up into mini-agents that each specialize in a subset of tools. But this just kicks the problem down the road until you hit new context window limits when you try to get your mini-agents to route internal tool calls or intents. And in any case, it's a shame to significantly complicate your architecture just to scale tool use.

5000 MCP Servers and Counting

MCP is a big step forward for agent tooling, but it does not solve these problems. Developers (and presumably some hackers) are posting hundreds of new MCP servers every day to MCP directories like Smithery and Glama (5000+ MCPs and counting). Are AI devs supposed to front-load all of these into their agents? This doesn't scale for the reasons stated above, and it has other downsides:

- The "S" in MCP stands for "Security". This recent joke underscores that untrusted MCP servers will see your secrets, and might run arbitrary commands on your PC. Do you trust the author of the MCP server? Do you even have a way to know who they are? One of these days a story will break about an MCP server stealing someone's crypto wallet.

- Most of the thousands of MCP servers are just wrapping APIs, many of which already have nuanced API documentation. MCP simplifies this down to a paragraph per function call, stripping a lot of information along the way. LLMs need this information to make good plans and to reliably execute tool calls.

MCP is a protocol, not a schema.

"MCP is useful when you want to bring tools to an agent you don’t control." - Harrison Chase, CEO of Langchain

MCP provides a valuable, standard mechanism to connect an agent to an external system. But it's a "USB-C" port, not a hard drive; the protocol, not the knowledge layer. It is great at what it is designed for, and performs poorly as a universal knowledge schema. Its natural-language descriptions and basic parameter lists are ergonomic for LLMs, but cannot represent the detailed information described in established API schemas like OpenAPI. Reliable agentic planning and execution might require on-demand details of authentication flows, error handling, complex data types, data governance policies, pricing, rate limits, and workflow logic. This level of detail could not be represented in MCP without compromising its simplicity and ease of use. What makes MCP good for agents makes it poor as a universal API schema.
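
As an illustration of what gets lost, the sketch below projects a hypothetical OpenAPI-style operation down to an MCP-style tool entry (`name`, `description`, `inputSchema`) and reports which top-level fields had nowhere to go. The `createInvoice` operation, its scopes, and the naive wrapping logic are all invented for this example; real MCP servers may wrap APIs differently or fold some of this detail into the description text.

```python
from typing import Any

# Hypothetical OpenAPI-style operation, invented for illustration.
OPERATION: dict[str, Any] = {
    "operationId": "createInvoice",
    "summary": "Create an invoice for a customer.",
    "parameters": [
        {
            "name": "customer_id",
            "in": "query",
            "required": True,
            "schema": {"type": "string"},
        },
    ],
    "security": [{"oauth2": ["invoices:write"]}],
    "responses": {
        "201": {"description": "Invoice created."},
        "429": {"description": "Rate limit exceeded."},
    },
}

# Fields that this naive wrapper carries over into the tool entry.
KEPT_FIELDS = {"operationId", "summary", "parameters"}


def to_mcp_style_tool(op: dict[str, Any]) -> dict[str, Any]:
    """Naively wrap an operation as a name + description + input schema."""
    params = op.get("parameters", [])
    return {
        "name": op["operationId"],
        "description": op.get("summary", ""),
        "inputSchema": {
            "type": "object",
            "properties": {p["name"]: p["schema"] for p in params},
            "required": [p["name"] for p in params if p.get("required")],
        },
    }


def dropped_fields(op: dict[str, Any]) -> list[str]:
    """Top-level operation fields with no home in the wrapped tool entry."""
    return sorted(set(op) - KEPT_FIELDS)


if __name__ == "__main__":
    print(to_mcp_style_tool(OPERATION))
    print("dropped:", dropped_fields(OPERATION))
```

In this toy case the authentication requirements and the documented error responses are what fall away, which is the kind of detail an agent may need when planning a call or recovering from a failure.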

Architecting Web 4.0

We believe agents will complement (not replace) the vast distributed infrastructure of websites and web services that already exist. MCP will play an important role connecting agents to the knowledge layer, but will not itself be the knowledge layer. The canonical documentation for each web service should be maintained in whatever format allows relevant detail and nuance to be expressed in an open and machine-readable format, such as OpenAPI for REST services, Arazzo for workflows and maybe even new formats like A2A, ACP or AGNTCY for agents. On the client side, MCP will connect agents to this knowledge.

The knowledge layer is the foundation for high-performing, highly capable and reliable agents. That’s why we launched OAK, the Open Agentic Knowledge repository. OAK is an open-source, declarative knowledge layer that stores canonical, schematized representations of APIs, workflows, and other machine interfaces. It allows agents to access detailed, AI-optimized information on-demand, while enabling developers and the broader community to collaboratively expand, refine, and govern the tool knowledge agents rely on. It's the missing substrate for scalable, capable and reliable tool use.
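
The general pattern of on-demand access, as opposed to front-loading, can be sketched in a few lines of Python. This is not OAK's or Jentic's actual API: the `ToolRecord` type, the in-memory `CATALOG`, and the `search` and `load_details` functions are hypothetical stand-ins, and the keyword-overlap ranking is a deliberately simple placeholder for whatever retrieval a real knowledge layer would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolRecord:
    name: str
    summary: str   # short text, cheap to search and to show the model
    details: str   # full documentation, loaded only when needed


# Hypothetical catalog; in practice this would live outside the context window.
CATALOG = [
    ToolRecord("send_email", "send email message", "Full docs: auth, params, errors, limits."),
    ToolRecord("create_event", "create calendar event", "Full docs: time zones, recurrence rules."),
    ToolRecord("charge_card", "charge customer card", "Full docs: idempotency keys, refunds."),
]


def search(query: str, limit: int = 3) -> list[str]:
    """Return names of tools whose summary shares words with the query, best match first."""
    words = set(query.lower().split())
    scored = []
    for record in CATALOG:
        overlap = len(words & set(record.summary.lower().split()))
        if overlap:
            scored.append((overlap, record.name))
    # Sort by descending overlap, then by name for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:limit]]


def load_details(name: str) -> str:
    """Fetch full documentation for one tool, only after it has been selected."""
    for record in CATALOG:
        if record.name == name:
            return record.details
    raise KeyError(name)


if __name__ == "__main__":
    candidates = search("send email to customer")
    print(candidates)
    print(load_details(candidates[0]))
```

The design point is that the agent's context only ever holds two small tools (search and load) plus the details of the one tool it actually needs, regardless of how large the catalog grows.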

And now you can easily plug your agents into OAK over MCP using Jentic. For more information, check out the launch post or head over to our installation instructions.